| CEN4020: Software Engineering II |


Software Testing Techniques & Strategies

These lecture notes have been assembled from a variety of

sources, over several offerings of software engineering courses.

The primary source is a collection of notes on Software Testing Techniques

posted at

http://www.scribd.com/doc/8622037/Software-Testing-Techniques.

They contain portions of copyrighted materials from various

textbook and web sources, collected here for classroom use under

the "fair use" doctrine. They are not to be published or

otherwise reproduced, and must be maintained under password

protection.


Software Testing

"Testing is the process of exercising a program

with the specific intent of finding errors, prior to delivery

to the end user."

Roger Pressman, in Software Engineering: A Practitioner's Approach

Purpose: uncover as many errors as feasible

- Not prove the system is error-free

Verification & Validation

- Testing is one of several Software Quality Assurance (SQA) activities

- Verification = "Are we building the product right?"

Are specific functions implemented correctly (according to specs)?

- Validation = "Are we building the right product?"

Are specs and functions traceable to customer requirements?

What Testing Can Do

- Find errors/defects

- Check conformance (or non-conformance) to requirements

- Measure performance

- Provide an indication of quality

- Number of defects found

- Adherence to standards

What Testing Cannot Do

- Can never prove the absence of defects

- Cannot create quality that was not designed and built in

- Problems discovered in testing may be too late to fix

Software Quality Assurance

- Goes beyond testing

- Spans the entire development process

- Includes requirements definition,

software design, coding, source code control, code reviews, change management, configuration management, release management, product integration

- Is a specialization with its own certification exam (CSQE)

SQA Complements to Testing

- Informal techniques

- Formal methods

Informal SQA Techniques

- Feasibility study

- Technical reviews/walkthroughs (of documents, code)

- Quality and configuration audits

- Database review

- Algorithm analysis

Formal methods

- Formal specification + proof of correctness

- Can catch errors missed in testing and reviews

- Can only prove correctness relative to a formal model and specification

- Errors are still possible

- In the proof (just as in implementation code)

- In ways that real system does not match formal model

- In mis-match between specification and customer requirements

Formal methods form a valuable complement to testing, but not a

replacement.

Testability Characteristics of Software

- Operability — it operates cleanly

- Observability — the results of each test case are readily observed

- Controllability — testing can be automated and optimized

- Decomposability — testing can be targeted to specific modules

- Simplicity — reduce complex architecture and logic to simplify tests

- Stability — few changes are requested during testing

- Understandability — of the design

Designing for testability can make testing easier and more

effective.

Need for Early Testing

Need for Independent Testers

- Developer

- Has insight into the code

- Driven by delivery schedule

- Likely to not test thoroughly enough

- Likely to repeat own errors

- e.g., mis-read requirements

- Independent Tester

- Must study the code and the requirements, from scratch

- Driven by quality

- Will attempt to break the software

- Will interpret requirements literally

Developers may and should do unit tests.

Other testing should be done by independent testers.

This does not imply that independent testing should be done

in place of testing by the development group. On the

contrary, both are necessary. Moreover, when independent testers

are used they need to be monitored closely to ensure the quality

of their work. Independent testers have been known to go through the

motions of testing, without doing a thorough job of finding bugs.

In contrast, good programmers write very thorough tests of

their own code. Generally, knowledge of the code (whitebox

testing) can be helpful in finding certain kinds of errors, but

blackbox testing is equally valuable. Both should be

done.

Test Planning

- Testing takes place throughout the development cycle

- Plans define a series of tests to be conducted

- Each test has a specific objective and describes test cases to examine

- Tests should be automated (coded) wherever possible

- But some will require humans, following scripts

Testing Artifacts in UP

- Testing model:

- Test cases - what to test

- Test procedures - how tests are conducted

- Test components - automate testing

- Test subsystem packages - provide framework for complex tests

- Test plan

- List of product defects

- Test evaluation

A test case specifies

- What to test (requirements)

- With which inputs

- Expected results

- Test conditions and constraints
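The four elements of a test case listed above map naturally onto a table-driven test. The following is a minimal sketch in C, not a prescribed format: the `test_case` struct, the unit under test `leap_year`, and the requirement IDs are all hypothetical, chosen only for illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical unit under test: Gregorian leap-year rule. */
bool leap_year(int year) {
    return (year % 4 == 0 && year % 100 != 0) || year % 400 == 0;
}

/* One record per test case. */
struct test_case {
    const char *requirement;  /* what to test (hypothetical requirement ID) */
    int input;                /* with which input */
    bool expected;            /* expected result */
    const char *condition;    /* test conditions and constraints */
};

static const struct test_case cases[] = {
    { "REQ-1: years divisible by 4 are leap years",   2024, true,  "Gregorian calendar" },
    { "REQ-2: century years are not leap years",      1900, false, "Gregorian calendar" },
    { "REQ-3: years divisible by 400 are leap years", 2000, true,  "Gregorian calendar" },
    { "REQ-4: all other years are not leap years",    2023, false, "Gregorian calendar" },
};

/* Runs every case and returns the number of failures. */
size_t run_cases(void) {
    size_t failures = 0;
    for (size_t k = 0; k < sizeof cases / sizeof cases[0]; k++)
        if (leap_year(cases[k].input) != cases[k].expected)
            failures++;
    return failures;
}

/* Test driver: aborts via assert if any case fails. */
void test_leap_year(void) {
    assert(run_cases() == 0);
}
```

Keeping the cases in a table separates the test data from the test procedure, so new cases can be added without touching the driver.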

Test Case Design

"Bugs lurk in corners and congregate at boundaries..."

Boris Beizer

- Objective: to uncover errors

- Criteria: in a complete manner

- Constraint: with a minimum of effort and time

Testing Methods: Views of Box

- Black Box

- White Box

- Grey Box

Black Box Testing

- Tester has no knowledge of internal implementation

- Tests software against specifications

- Using just exported interfaces and behaviors

White/Open/Glass Box Testing

- Tester has full access to the internals of the implementation

- May use private/internal interfaces, to e.g.,

- examine data values

- inject faults

- mutate code

- trace program execution

- run static analysis tools on code

- Can check code coverage of tests

Grey Box Testing

- Tester can read the implementation code

- Can design tests based on knowledge of structure and algorithms

- But does not have run-time access to internals

- Tests limited to exported (user) interfaces

Test Coverage Analysis

- Goal: How well do tests cover the system?

- Kinds of coverage we can check:

- requirements

- use cases (scenarios, paths)

- interfaces (screens, buttons, classes, methods, parameter ranges, options)

- configurations, installations

- load levels

- code (statements, lines, paths, etc.)

White Box Testing Techniques

- API testing - including private API's

- Code coverage

- Fault injection

- Mutation testing

- Static testing

Code Coverage Analysis

Objective: to see how much of the code has been covered by the testing

- Logic errors and incorrect assumptions are inversely proportional to a path's execution probability

- We may believe that a path is unlikely to be executed; reality may differ

- Typographical errors are random, so untested paths will contain some

What to Cover?

Can define coverage in terms of a control flow graph

- Every path?

- Every node?

- Every edge?

White Box: Exhaustive Path Testing

White Box: Selective Path Testing

Basis Path Set

Basis set = a minimal set of

independent paths that can, in linear combination,

generate all possible paths through the CFG

Idea: Construct a set of test cases that covers a basis set of

control flow paths.

Basis Sets & Cyclomatic Complexity

Fact: a CFG may have many basis sets

Fact: every basis set of a given graph contains the same number of paths. This number, V(G), is called the cyclomatic complexity of the CFG.

Fact: V(G) = |E| - |N| + 2p, where

|E| = number of edges

|N| = number of nodes

p = number of connected components (normally p = 1, giving V(G) = |E| - |N| + 2)

Fact: every edge of a flow graph is traversed by

at least one path in every basis set.

Consequence: covering every edge of a CFG requires no more than V(G) tests. (It can sometimes be done with fewer.)

Q: How should we choose a good basis set?

A: One way is to start with what seems to be the most

important path through the code, and use this as the baseline

from which we start to build an independent set of paths.

Simplified Computation of V(G)

- Start with the value 1, and for each decision node N in the CFG add one less than the branching factor (number of outgoing edges) of N.

- Count predicates directly from the source code:

- if, while, etc add one to complexity

- boolean operators add one if they have short-circuit semantics

- switch statements add one for each case-labeled statement (not

the number of labels)

- If CFG is planar, count regions.

There is another way to compute V(G), using an

adjacency (connection) matrix.
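As a sketch of the matrix approach, the code below computes V(G) two ways for a small hand-made flow graph and checks that they agree. The graph itself is hypothetical (an if-then-else followed by a while loop). The matrix rule used here is one common formulation: for each row with at least one entry, add the number of entries minus one, then add 1 to the total.

```c
#include <assert.h>

#define N_NODES 6

/* Hypothetical CFG: node 0 is an if that branches to 1 and 2; both
   join at node 3, a while test; node 4 is the loop body; node 5 is
   the exit.  adj[r][c] == 1 means there is an edge r -> c. */
static const int adj[N_NODES][N_NODES] = {
    /* 0 */ {0, 1, 1, 0, 0, 0},
    /* 1 */ {0, 0, 0, 1, 0, 0},
    /* 2 */ {0, 0, 0, 1, 0, 0},
    /* 3 */ {0, 0, 0, 0, 1, 1},
    /* 4 */ {0, 0, 0, 1, 0, 0},
    /* 5 */ {0, 0, 0, 0, 0, 0},
};

/* V(G) = |E| - |N| + 2, valid for a single connected flow graph. */
int vg_from_counts(void) {
    int edges = 0;
    for (int r = 0; r < N_NODES; r++)
        for (int c = 0; c < N_NODES; c++)
            edges += adj[r][c];
    return edges - N_NODES + 2;
}

/* Connection-matrix method: sum (entries - 1) over non-empty rows,
   then add 1. */
int vg_from_matrix(void) {
    int total = 0;
    for (int r = 0; r < N_NODES; r++) {
        int entries = 0;
        for (int c = 0; c < N_NODES; c++)
            entries += adj[r][c];
        if (entries > 0)
            total += entries - 1;
    }
    return total + 1;
}

/* Both methods must give the same complexity. */
void check_vg(void) {
    assert(vg_from_counts() == vg_from_matrix());
}
```

For this graph there are 7 edges and 6 nodes, and two decision nodes (0 and 3), so both methods give V(G) = 3.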

Computing V(G) Directly from Code

#include <stdio.h>

void complexity6 (int i, int j) {                       // V(G) = 1
    if (i > 0 && j < 0) {                               // + 2 (if and &&)
        while (i > j) {                                 // + 1
            if (i % 2 && j % 2) printf ("%d\n", i);     // + 2 = 6 (if and &&)
            else printf ("%d\n", j);
            i--;
        }
    }
}

Other Uses of Cyclomatic Complexity

A software metric that reflects the logical

complexity of code, which can be applied to:

- code development risk analysis

- change risk analysis in maintenance

- test planning

- re-engineering cost and risk

Cyclomatic Complexity

Basis Path Testing


Basis Path Testing Notes

Basis Path Coverage Example

Function is supposed to return the number of occurrences of 'C' if the string begins with 'A', else return -1.

int count(char * string) {                 // V(G) = 1
    int index = 0, i = 0, j = 0;
    if (string[index] == 'A') {            // +1
L1:     index = index + 1;
        if (string[index] == 'B') {        // +1
            j++; goto L1; }
        if (string[index] == 'C') {        // +1
            i += j; j = 0; goto L1; }
        i += j;
        if (string[index] != '\0') {       // +1 = 5
            j = 0; goto L1; }
    } else i = -1;
    return i; }

Error: The count is incorrect for strings that begin

with the letter 'A' and in which the number of occurrences of

'B' is not equal to the number of occurrences of 'C'.

The commonly used statement and branch coverage testing criteria can both be satisfied using the following two test cases:

- string = "X" ⇒ returns -1 (correct)

- string = "ABCX" ⇒ returns 1 (correct)

Since V(G) = 5, these tests do not satisfy the basis set

testing criterion.

A set of test cases that satisfy the criterion:

| string | return | pass/fail |
|---|---|---|
| "X" | -1 | correct |
| "ABCX" | 1 | correct |
| "A" | 0 | correct |
| "AB" | 1 | incorrect |
| "AC" | 0 | incorrect |
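The table can be checked mechanically. The sketch below reproduces the `count` function with its defect intact (the only change is a `const` qualifier on the parameter) and runs the five basis-path inputs against the specified results. The `path_test` struct and the helper names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* The function from the example above, defect included. */
int count(const char *string) {
    int index = 0, i = 0, j = 0;
    if (string[index] == 'A') {
L1:     index = index + 1;
        if (string[index] == 'B') { j++; goto L1; }
        if (string[index] == 'C') { i += j; j = 0; goto L1; }
        i += j;
        if (string[index] != '\0') { j = 0; goto L1; }
    } else i = -1;
    return i;
}

struct path_test {
    const char *input;
    int required;   /* value the specification calls for */
};

/* The five basis-path inputs, with the results the spec requires. */
static const struct path_test basis_tests[] = {
    { "X", -1 }, { "ABCX", 1 }, { "A", 0 }, { "AB", 0 }, { "AC", 1 },
};

/* Returns how many basis-path tests expose the defect. */
size_t count_failures(void) {
    size_t failures = 0;
    for (size_t k = 0; k < sizeof basis_tests / sizeof basis_tests[0]; k++)
        if (count(basis_tests[k].input) != basis_tests[k].required)
            failures++;
    return failures;
}

/* The last two tests ("AB" and "AC") are the ones that fail. */
void demonstrate_defect(void) {
    assert(count_failures() == 2);
}
```

The first two inputs alone give full statement and branch coverage yet report no failures, which is the point of the example.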

Weak Structured Testing

- does not require a basis set

- uses any set of V(G) paths that covers all the branches

- may not detect as many errors

Control Structure Testing

Typical Errors

- boolean operator error: incorrect, missing, or extra

- boolean variable error

- parenthesis error

- relational operator error

- arithmetic expression error

Control Structure Testing

Condition Testing

- branch testing: cover both branches of every simple condition

- domain testing: cover <, =, and > cases of every comparison

- BRO (Branch & Relational Operator) testing: combines both
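To illustrate why domain testing adds something beyond branch testing, here is a small sketch in C. The threshold, the function names, and the faulty variant are hypothetical. The faulty version contains a relational operator error (`>` where `>=` was intended), which only the `=` case reveals.

```c
#include <assert.h>
#include <stdbool.h>

#define ADULT_AGE 18   /* hypothetical threshold */

/* Intended behaviour: true when age >= ADULT_AGE. */
bool is_adult(int age) { return age >= ADULT_AGE; }

/* Hypothetical faulty version: relational operator error (> for >=). */
bool is_adult_faulty(int age) { return age > ADULT_AGE; }

/* Domain testing: one test for each of the <, =, and > cases of the
   comparison.  Returns a bitmask (bit 0 for <, bit 1 for =, bit 2
   for >) of the cases on which impl gives the wrong answer. */
unsigned domain_test(bool (*impl)(int)) {
    const int inputs[3] = { ADULT_AGE - 1, ADULT_AGE, ADULT_AGE + 1 };
    const bool expected[3] = { false, true, true };
    unsigned failed = 0;
    for (int k = 0; k < 3; k++)
        if (impl(inputs[k]) != expected[k])
            failed |= 1u << k;
    return failed;
}

void test_domain(void) {
    assert(domain_test(is_adult) == 0u);
    assert(domain_test(is_adult_faulty) == 2u);  /* only the = case fails */
}
```

Branch testing with ages 17 and 19 would cover both branches of the faulty version without detecting the error.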

Data Flow Testing

- definition - statement in which a variable is given a new value

- use - reference to the value from a particular definition

- D (definition of X at statement S) is live at S'

if there is a path from S to S' along which X is not redefined

- DU chain = [X,S,S'] such that the value of X defined at S is live and used at S'

- test strategy: cover every DU chain

This is one of several helpful strategies,

but like the others it is not necessarily complete.

Can you think of a counter-example?

Compilers do flow analysis as part of the code "optimization"

process. The same analysis can be used by test coverage

analysis tools.
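As a small illustration of DU chains, consider the hypothetical function below, annotated with the definitions and uses of its local variable `s` (the parameters are ignored for simplicity). There are three DU chains for `s`: [s,S1,S2], [s,S1,S4], and [s,S3,S4]. Two test cases are enough to cover all of them.

```c
#include <assert.h>

/* Hypothetical unit under test: a + b, capped at limit. */
int clamp_sum(int a, int b, int limit) {
    int s = a + b;      /* S1: definition of s              */
    if (s > limit)      /* S2: use of s as defined at S1    */
        s = limit;      /* S3: redefinition of s            */
    return s;           /* S4: use of s from S1 or from S3  */
}

void test_du_chains(void) {
    assert(clamp_sum(1, 2, 10) == 3);    /* covers [s,S1,S2] and [s,S1,S4] */
    assert(clamp_sum(8, 5, 10) == 10);   /* covers [s,S1,S2] and [s,S3,S4] */
}
```

Note that the definition at S1 is not live at S4 on the path through S3, because `s` is redefined along the way.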

Loop Testing


Coverage for simple n-bounded loop

- skip the loop entirely

- just one pass through the loop

- two passes through the loop

- m passes through the loop, where m < n

- n-1, n, n+1 passes through the loop
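The coverage list above can be turned into a test almost line for line. The following is a sketch under assumed names: `sum_first` is a hypothetical function whose loop makes at most `MAX_ITEMS` passes (the bound n), and the input is an array of ones so that the result equals the number of passes actually made.

```c
#include <assert.h>

#define MAX_ITEMS 8   /* the loop bound n (hypothetical) */

/* Sums the first `count` items, making at most MAX_ITEMS passes. */
int sum_first(const int *items, int count) {
    int total = 0;
    for (int k = 0; k < count && k < MAX_ITEMS; k++)
        total += items[k];
    return total;
}

/* Ten ones, so the sum equals the number of passes made. */
static const int ones[10] = { 1, 1, 1, 1, 1, 1, 1, 1, 1, 1 };

void test_simple_loop(void) {
    assert(sum_first(ones, 0) == 0);              /* skip the loop     */
    assert(sum_first(ones, 1) == 1);              /* one pass          */
    assert(sum_first(ones, 2) == 2);              /* two passes        */
    assert(sum_first(ones, 4) == 4);              /* m passes, m < n   */
    assert(sum_first(ones, MAX_ITEMS - 1) == 7);  /* n-1 passes        */
    assert(sum_first(ones, MAX_ITEMS) == 8);      /* n passes          */
    assert(sum_first(ones, MAX_ITEMS + 1) == 8);  /* attempt n+1       */
}
```

The last case asks for one pass more than the bound allows and checks that the loop stops at n.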

Loop Testing: Nested Loops

1. start at the innermost loop; set all outer loops to their minimum iteration parameter values

2. test the min+1, typical, max-1, and max for the innermost loop, while holding the outer loops at their minimum values

3. move out one loop and set it up as in step 2, holding all other loops at typical values

4. repeat step 3 until the outermost loop has been tested

Loop Testing: Concatenated Loops

- if the loops are independent of one another, treat each as a simple loop

- otherwise, apply the nested loop approach

Unstructured loops are not amenable to testing based

on loop structure. One can either rewrite them into structured

format, or use some other technique, like basis path coverage.

Testing tools are very helpful in verifying test

coverage. Some tools work like debuggers or execution profilers,

by adding instrumentation code or breakpoints to the program

under test. This allows one to verify that a given

path has been taken.

Black-Box Testing

Goal = good coverage of the requirements and domain model

Black Box Testing Techniques

- Equivalence partitioning

- Boundary value analysis

- Comparison testing

- All pairs testing

- "fuzz" or randomized testing

- Specification-based testing

- Use case testing

Equivalence Partitioning


Idea:

- Partition the input space into a small number of equivalence classes

such that elements of the same class are supposed to be "handled in the

same manner"

- Test the program with one element from each class

- Classes are categorized as "valid" or "invalid"

This is also known as input space partitioning.

Examples

- For a range: out of range low (invalid); in range (valid); out of range high (invalid)

e.g., input is a 5-digit integer between 10,000 and 99,999

equivalence classes are <10,000, 10,000-99,999, and >99,999

- For a specific value: value low (invalid); value correct (valid); value high (invalid)

- For a member of a set: value in set (valid); value out of set (invalid)

- For a boolean condition: true (valid); false (invalid)
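For the range example, the three equivalence classes and one representative test per class might be sketched as follows. The enum, the function name, and the chosen representatives are hypothetical; any other value from the same class should, by assumption, be handled the same way.

```c
#include <assert.h>

enum input_class { TOO_LOW, IN_RANGE, TOO_HIGH };

/* Classifies an input for the 5-digit range 10,000 to 99,999. */
enum input_class classify(long n) {
    if (n < 10000) return TOO_LOW;    /* invalid class */
    if (n > 99999) return TOO_HIGH;   /* invalid class */
    return IN_RANGE;                  /* valid class   */
}

/* One arbitrary representative per equivalence class. */
void test_partitions(void) {
    assert(classify(42) == TOO_LOW);
    assert(classify(54321) == IN_RANGE);
    assert(classify(123456) == TOO_HIGH);
}
```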

Boundary Value Analysis

- Complements partitioning

- Once equivalence classes are determined, design tests for

all the boundaries of each class

- e.g., if input is a 5-digit integer between 10,000 and 99,999,

choose test cases

00000, 09999, 10000, 10001, 99999, 100000
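The sketch below suggests why boundary values complement partitioning. It compares the specified range check with a hypothetical faulty implementation that has an off-by-one error at the lower bound. A representative from the middle of the valid class cannot tell the two apart; the boundary values can. All names are illustrative assumptions.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Specified behaviour: accept 10,000 through 99,999 inclusive. */
bool accepts(long n) { return n >= 10000 && n <= 99999; }

/* Hypothetical faulty implementation: off by one at the lower bound. */
bool accepts_faulty(long n) { return n > 10000 && n <= 99999; }

/* Values on and immediately beside each boundary of the valid class. */
static const long boundary_values[] = {
    9999, 10000, 10001, 99998, 99999, 100000
};

/* Returns how many boundary values the faulty version gets wrong. */
size_t boundary_mismatches(void) {
    size_t mismatches = 0;
    for (size_t k = 0; k < sizeof boundary_values / sizeof boundary_values[0]; k++)
        if (accepts_faulty(boundary_values[k]) != accepts(boundary_values[k]))
            mismatches++;
    return mismatches;
}

void test_boundaries(void) {
    assert(accepts_faulty(54321) == accepts(54321));  /* interior value: no difference */
    assert(boundary_mismatches() == 1);               /* the fault shows at 10000 */
}
```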


Observations

Equivalence partitioning & boundary analysis

- Assume the implementor has implemented the same

partitioning

(and has not, e.g., partitioned the cases more finely)

- May not always capture the real semantics of the application

- But some restrictions are a practical necessity,

since there would be an exponential blow-up of test cases if brute force

testing were applied to all combinations of classes for

each input (not feasible).

Comparison Testing

- Provide two or more implementations

- Compare the behavior of one against the other

- If just used for testing, one implementation can be

very inefficient, but more obviously correct

- If both are efficient, can be extended to fault-tolerant

production system

See test examples in Ada for some tests

that use this technique.
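A comparison test can also be sketched in a few lines of C. In this hypothetical example, a bit-counting routine that clears one set bit per pass is compared against a slower reference that is easier to verify by inspection. The inputs come from a simple linear congruential generator, chosen here only so that runs are reproducible.

```c
#include <assert.h>
#include <stdint.h>

/* Reference implementation: slow, but easy to check by inspection. */
int bits_set_reference(uint32_t x) {
    int n = 0;
    for (int bit = 0; bit < 32; bit++)
        if (x & (UINT32_C(1) << bit))
            n++;
    return n;
}

/* Implementation under test: clears the lowest set bit each pass. */
int bits_set_fast(uint32_t x) {
    int n = 0;
    while (x != 0) {
        x &= x - 1;
        n++;
    }
    return n;
}

/* Feeds the same pseudo-random inputs to both implementations and
   returns the number of disagreements. */
unsigned compare_implementations(unsigned trials) {
    uint32_t x = 12345u;   /* fixed seed for reproducibility */
    unsigned mismatches = 0;
    for (unsigned t = 0; t < trials; t++) {
        x = x * UINT32_C(1664525) + UINT32_C(1013904223);
        if (bits_set_fast(x) != bits_set_reference(x))
            mismatches++;
    }
    return mismatches;
}

void test_by_comparison(void) {
    assert(compare_implementations(1000) == 0);
}
```

Agreement between the two only shows that they behave alike on the inputs tried; a defect shared by both implementations would go unnoticed.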

Other Black Box Techniques

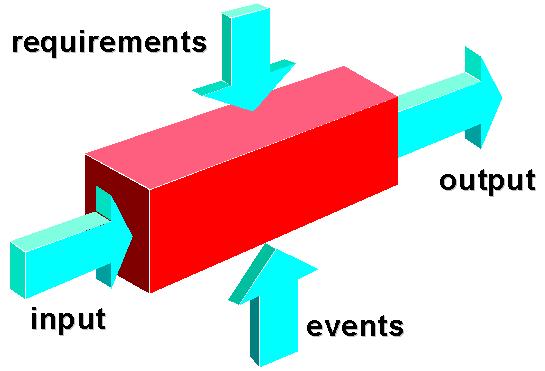

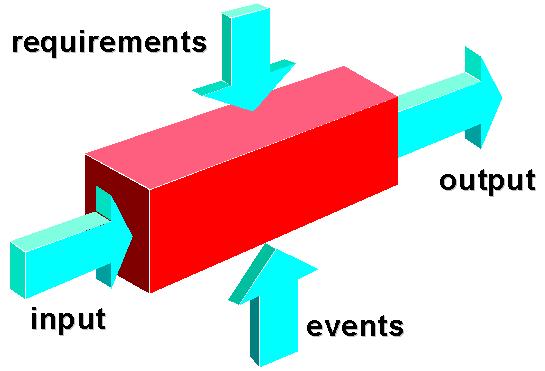

Testing is an "Umbrella Activity"

Remember the V, spiral, and UP models. Testing activities occur

in all phases.

Testing in UP

Testing Levels

- Unit Testing - software modules by themselves

- Integration Testing - how SW modules interact

- Validation Testing - how SW requirements are satisfied

- System Testing - how SW and other systems work together as a complete system

- System Integration - interaction with 3rd-party systems

- Regression Testing - whether changes have broken previous test results *

- Acceptance Testing - whether the job is done

- Alpha testing - by users or test team on developer site

- Beta testing - by users on user site

Progress is bottom-up (smaller to larger scope)

All kinds are necessary

* Regression testing is critical, should be automated, and should encompass all types of tests.

Unit Tests

- Focus on a single component (class or method)

- Goal: Does the component live up to its contract?

- Done in implementation workflow of UP, before integration testing

- Typically designed and written by the programmer who writes the component

- Can apply all techniques, including white and black box

- Become part of regression test suite

Unit Testing Rules (Dennis)

- Write the test first

- Test should always fail the first time

- Define the expected output or result

- Use reproducible tests

- Test for invalid or unexpected conditions *

- "Don't test your own programs?" *

- "The probability of locating more errors in a module is

directly proportional to the number of errors already found in that

module" *

* Some of these are intentional over-generalizations.

- You should always first write some unit tests of your own.

- Don't forget to also test for the expected conditions.

- Even good tests will succeed the first time, if the code is good enough (that is, if you are a good enough programmer).

- Eventually, the rate of error detection should drop, if everyone is doing

their jobs well.

Unit Testing

Unit Test Environment

Unit Test Frameworks

- Standard frameworks

- NUnit - free, for the Microsoft .NET environment

- JUnit - free for Java, plugins for most IDEs

- PyUnit (the unittest module) - free for Python, part of the standard library

- other frameworks available for other languages

- Alternative: Write your own tests, using project-specific

support framework
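A project-specific support framework need not be elaborate. The following is a minimal sketch for C, assuming nothing beyond the standard library: a `CHECK` macro that records each check and reports failures with file and line, plus a runner that returns the failure count. The macro, the counters, and the unit under test `max_of` are all hypothetical.

```c
#include <assert.h>
#include <stdio.h>

static int checks_run = 0;
static int checks_failed = 0;

/* Records one check; reports the failing expression and location. */
#define CHECK(cond)                                          \
    do {                                                     \
        checks_run++;                                        \
        if (!(cond)) {                                       \
            checks_failed++;                                 \
            fprintf(stderr, "%s:%d: CHECK failed: %s\n",     \
                    __FILE__, __LINE__, #cond);              \
        }                                                    \
    } while (0)

/* Hypothetical unit under test. */
int max_of(int a, int b) { return a > b ? a : b; }

void test_max_of(void) {
    CHECK(max_of(1, 2) == 2);
    CHECK(max_of(2, 1) == 2);
    CHECK(max_of(-3, -3) == -3);
}

/* Runs all unit tests; returns the number of failed checks. */
int run_unit_tests(void) {
    checks_run = checks_failed = 0;
    test_max_of();
    assert(checks_run > 0);   /* guard against an empty test suite */
    printf("%d checks, %d failed\n", checks_run, checks_failed);
    return checks_failed;
}
```

Unlike a bare `assert`, `CHECK` lets the run continue after a failure, so one run reports every failing check. A `main` that returns `run_unit_tests()` makes the result usable from a build script.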

Unit Test Examples

See examples in Ada for some tests

that use this technique.

Interface testing

- Takes place when modules or sub-systems are integrated to create larger systems

- Some should also be done before, as part of unit testing

- Objectives are to detect faults due to interface errors or invalid assumptions about interfaces

- Particularly important for object-oriented development as objects are defined by their interfaces

Types of Interfaces

- Parameter interfaces

- Data passed from one procedure to another

- Shared memory interfaces

- Block of memory is shared between procedures

- Procedural interfaces

- Sub-system encapsulates a set of procedures to be called by other sub-systems

- Message passing interfaces

- Sub-systems request services from other sub-systems

Interface errors

- Interface misuse

- A calling component calls another component and makes an error in its use of its interface

e.g. parameters in the wrong order

- Interface misunderstanding

- A calling component embeds assumptions about the behavior of the called component which are

incorrect

- Timing errors

- The called and the calling component operate at different speeds and out-of-date information

is accessed

Interface testing guidelines

- Design tests so that parameters to a called procedure are at the extreme ends of their ranges.

- Always test pointer parameters with null pointers, and junk pointers.

- Design tests which cause the component to fail.

- Include stress testing of message passing systems.

- In shared memory systems, vary the order in which components are activated.
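The first three guidelines might look like this for a parameter interface in C. This is a sketch only: `safe_copy` and its status codes are hypothetical. Junk (dangling or uninitialized) pointers are deliberately left out, because dereferencing them is undefined behavior in C and cannot be tested portably in this style.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

enum copy_status { COPY_OK, COPY_BAD_ARG, COPY_TOO_LONG };

/* Hypothetical unit under test: copies src into dst, whose capacity
   cap includes the terminating '\0'; rejects invalid arguments. */
enum copy_status safe_copy(char *dst, size_t cap, const char *src) {
    if (dst == NULL || src == NULL || cap == 0)
        return COPY_BAD_ARG;
    size_t len = strlen(src);
    if (len >= cap)
        return COPY_TOO_LONG;
    memcpy(dst, src, len + 1);
    return COPY_OK;
}

void test_safe_copy_interface(void) {
    char buf[4];

    /* Null pointers in each pointer position. */
    assert(safe_copy(NULL, sizeof buf, "abc") == COPY_BAD_ARG);
    assert(safe_copy(buf, sizeof buf, NULL) == COPY_BAD_ARG);

    /* Extreme ends of the capacity and length ranges. */
    assert(safe_copy(buf, 0, "") == COPY_BAD_ARG);
    assert(safe_copy(buf, 1, "") == COPY_OK);              /* smallest usable buffer */
    assert(safe_copy(buf, sizeof buf, "abc") == COPY_OK);  /* exact fit */
    assert(strcmp(buf, "abc") == 0);

    /* A test designed to make the component fail. */
    assert(safe_copy(buf, sizeof buf, "abcd") == COPY_TOO_LONG);
}
```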

Stress testing

- Pushes the limits - e.g., incorrect usage of module

interfaces (bad or out of range parameters),

large file sizes, large numbers of files, large numbers of

concurrent users, high network traffic loads

- Exercises the system beyond its maximum design load. Stressing the system often causes defects to come to light.

- Stressing the system tests its failure behavior. Systems should not fail catastrophically. Stress testing checks for unacceptable loss of service or data.

- Particularly relevant to distributed systems which can exhibit severe degradation as a network becomes overloaded.

Integration Testing

- Purpose: Do modules (classes) work together correctly, as a group?

- Approaches

- User interface testing

- Use case testing - look at interesting distinct scenarios, avoid overlap

- Interaction testing - look at interaction diagrams as guide

- System interface testing

- Use all approaches

Integration Testing Strategies

Top Down Integration

Bottom-Up Integration

Sandwich Testing

Testing approaches

- Architectural validation

- Top-down integration testing is better at discovering errors in the system architecture

- System demonstration

- Top-down integration testing allows a limited demonstration at an early stage in the development

- Test implementation

- Often easier with bottom-up integration testing

- Test observation

- Problems with both approaches. Extra code may be required to observe tests

Regression Testing

- Goal: detect regressions - bugs introduced by changes, that cause previously

tested functionality to fail

- Ideal: re-run every test, each time code is changed

- Minimum: run "smoke tests"

- Includes both unit and system (functional) tests

- Needs to be done frequently, and so should be automated

- Every bug-fix should have at least one corresponding regression test, to verify that the bug stays fixed
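As an illustration of the last point, suppose the defect in the `count` function from the basis path coverage example has been repaired. The corrected function below is a hypothetical fix written for this sketch, not taken from the original source. The inputs that exposed the defect become permanent regression tests, kept alongside the tests that already passed.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical corrected version: number of 'C' characters if the
   string begins with 'A', else -1. */
int count_fixed(const char *string) {
    if (string[0] != 'A')
        return -1;
    int occurrences = 0;
    for (size_t k = 1; string[k] != '\0'; k++)
        if (string[k] == 'C')
            occurrences++;
    return occurrences;
}

/* Regression tests for the bug: unequal numbers of 'B' and 'C'. */
void regression_unequal_b_and_c(void) {
    assert(count_fixed("AB") == 0);
    assert(count_fixed("AC") == 1);
}

/* Tests that passed before the fix must still pass after it. */
void regression_previously_passing(void) {
    assert(count_fixed("X") == -1);
    assert(count_fixed("ABCX") == 1);
    assert(count_fixed("A") == 0);
}
```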

System Testing

- Goal: How well does the system meet end user's (business) requirements?

- Installation - Can it be installed to work correctly?

- Configuration testing - tests different configurations

- Recovery testing - forces failures and verifies acceptable recovery

- Security testing - testers attempt to penetrate security

- Negative tests - test outside the intended design (wrong configuration, wrong input)

to reveal design weaknesses

- Stress testing - pushes system resource limits, tries to break it

- Performance testing - response time, throughput, resource usage, etc.

- Usability testing - Can users use it?

- Documentation testing - accuracy, readability, understandability, adequacy

- Internationalization and localization

Acceptance Tests

- Goal: Is the system acceptable to users?

- Used for projects with a single customer,

for formal delivery milestone, prior to payment

- Conducted by end user

Alpha Testing

- Used for projects with many customers

- Conducted by customer, at the developer's site

- Used in natural setting

- Developer looks over the shoulder of the user

Beta Testing

- Follows alpha

- Conducted at one or more customer sites by customer

- Developer is not present

- Customer records and reports apparent problems

Testing Tools

- Test coverage tools - for white-box testing

- Scripting tools - for automated test generation, running & result checking

- Comparison tools - to compare results of tests with

expected values

- Database, to keep inventory, history, and correlations

- requirements, use cases

- test criteria

- tests

- test results - for comparisons

- failures (what test caught what?)

- corrective actions

- times at which bugs were caught, and fixed

- who did what?

- what was the cause of the problem?

- did we later find out that we had misdiagnosed the problem?

When a correction is made to code, it is a good idea to

include a comment at the location of the patch, indicating the reason

for the change. If this is a bug-fix, one should indicate which

test failures exposed the problem that motivated the fix.

If there is no concise test, one should be written and added

to the regression test set. Later, if someone questions that

part of the code (maybe in fixing another bug), they will know

to look at the relevant regression tests *before* making a new

patch.

Example of Test Assertions

Debugging

- A consequence of testing

- Leads to further testing

Debugging: A Diagnostic Process

Debugging Effort

Symptoms & Causes

Consequences of Bugs

Dangers of Patching

- Tests catch defect

- Patch enables tests to pass

- But may not completely correct defect

- Patch may also introduce new defect(s)

- More patching makes defects still harder to catch

- Also makes code harder to understand

Debugging Approaches

- Brute force

- Backtracking

- Testing

- Cause Elimination

Brute Force

- Dumping variables

- Adding output statements

- Using a "debugger":

- Tracing execution

- Setting breakpoints

- etc.

- Hit-or-miss, no reasoned plan

Backtracking

- Start from point of failure

- Trace flow of control and/or data backward in code

- Hope to locate error

Cause Elimination: Induction

- Locate pertinent data

- Organize the data

- Devise a hypothesis

- Prove the hypothesis

Deduction → The Scientific Method

1. Analyze

2. Hypothesize a set of possible causes

3. Design experiment to test hypothesis

4. Go back to step 1 until problem is understood

This has sometimes been called the "wolf fence" approach.
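One way to picture the wolf fence idea is as a bisection: place a "fence" halfway between a point where the state is known to be good and a point where it is known to be bad, see which side the problem is on, and repeat. The sketch below assumes a hypothetical check `state_is_bad(step)`, simulated here with a fixed threshold, and assumes that once the state goes bad it stays bad.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical symptom check: true if the program state is already
   corrupted after `step` processing steps.  The defect is simulated
   here as first appearing at step 37. */
bool state_is_bad(int step) { return step >= 37; }

/* Given that the state is good after step `good` and bad after step
   `bad`, halves the interval until the two are adjacent.  Returns
   the first step at which the state is bad. */
int first_bad_step(int good, int bad) {
    assert(good < bad && !state_is_bad(good) && state_is_bad(bad));
    while (bad - good > 1) {
        int fence = good + (bad - good) / 2;
        if (state_is_bad(fence))
            bad = fence;     /* the problem is before the fence */
        else
            good = fence;    /* the problem is after the fence  */
    }
    return bad;
}
```

Each experiment halves the region under suspicion, so a fault somewhere in 100 steps is located in about 7 checks. In practice the check would be an assertion, a breakpoint inspection, or a print statement placed at the fence.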

Debugging Tools

- Debuggers: provide trace, breakpoint, state examination, e.g.,

- gdb - Gnu debugger, under Solaris and Linux

- dbx - interactive debugging, under Solaris

- adb - an assembly-language-level debugger, under Solaris

- xxgdb - a GUI for gdb, under Linux

- Tracing tools, e.g.

- strace - system call tracing, under Solaris and Linux

- ctrace - line-by-line program execution tracing, under Solaris

- ltrace - dynamic library call tracing, under Solaris and Linux

- Dynamic memory usage checkers: catch allocation, deallocation, initialization, and alignment errors, e.g.,

- memdb & gcmonitor - the Workshop Memory Monitor, under Solaris

- efence - Electric Fence debugger for malloc problems, under Linux

- NJAMD - Not Just Another Malloc Debugger, under Linux

- Compiler warning messages, e.g., gcc "-pedantic", "-Wall"

Design for Debugging

- Assertions & other constraint/consistency checks

- Logging

- State-querying functions

- State-dumping functions

- Debugging switches (compile time or run time)
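The items above can be combined in one small module. The following sketch uses a hypothetical bounded stack; the names and the `DEBUG_TRACE` switch are assumptions made for illustration. It shows an invariant check used in assertions, a state-querying function, a state-dumping function, and a compile-time switch that turns on logging.

```c
#include <assert.h>
#include <stdio.h>

#ifndef DEBUG_TRACE
#define DEBUG_TRACE 0          /* compile-time debugging switch */
#endif

#define STACK_CAP 16

struct int_stack {
    int items[STACK_CAP];
    int depth;
};

/* Consistency check: the invariant every operation must preserve. */
int stack_is_consistent(const struct int_stack *s) {
    return s != NULL && s->depth >= 0 && s->depth <= STACK_CAP;
}

/* State-querying function. */
int stack_depth(const struct int_stack *s) { return s->depth; }

/* State-dumping function: writes the whole state to `out`. */
void stack_dump(const struct int_stack *s, FILE *out) {
    fprintf(out, "stack depth=%d:", s->depth);
    for (int k = 0; k < s->depth; k++)
        fprintf(out, " %d", s->items[k]);
    fputc('\n', out);
}

/* Returns 1 on success, 0 if the stack is full. */
int stack_push(struct int_stack *s, int value) {
    assert(stack_is_consistent(s));      /* assertion on entry */
    if (s->depth == STACK_CAP)
        return 0;
    s->items[s->depth++] = value;
    if (DEBUG_TRACE)                     /* logging, when switched on */
        stack_dump(s, stderr);
    assert(stack_is_consistent(s));      /* assertion on exit */
    return 1;
}
```

Compiling with `-DDEBUG_TRACE=1` enables the trace output, and compiling with `-DNDEBUG` removes the assertions; both are standard mechanisms of the C preprocessor and `<assert.h>`.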

Debugging Cautions

- Is this the only place the error occurs in the program?

Usually, the faulty logic that led to the error will crop up repeatedly

in the code.

- What side-effects will the patch have?

Will it introduce new bugs?

- What could have been done to prevent this bug,

or catch it earlier?

Debugging: Final Thoughts

- Don't run off half-cocked; think about the symptom you are seeing.

- Use tools (e.g., dynamic debugger) to gain more insight.

- If at an impasse, get help from someone else.

- Be absolutely sure to conduct regression tests when you

do "fix" the bug.

Object-Oriented Testing

- Begins by evaluating the correctness and consistency of the OOA and OOD models

- Testing strategy changes

- The concept of the "unit" broadens due to encapsulation

- Integration focuses on classes and their execution across a "thread" in the context of a

usage scenario

- Validation uses conventional black box methods

- Test case design draws on conventional methods, but also encompasses special features

Broadening the View of "Testing"

It can be argued that the review of OO analysis and design

models is especially useful because the same semantic

constructs (e.g. classes, attributes, operations, messages)

appear at the analysis, design, and code level. Therefore, a

problem in the definition of class attributes that is uncovered

during analysis will circumvent side effects that might occur

if the problem were not discovered until design or code (or

even the next iteration of analysis).

Testing the CRC Model

1. Revisit the Class-Responsibility-Collaboration and object-relationship models

2. Inspect the description of each CRC index card to determine if a delegated responsibility is part of the collaborator's definition.

3. Invert the connection to ensure that each collaborator that is asked for service is receiving requests from a reasonable source.

4. Using the inverted connections examined in step 3, determine whether other classes might be required or whether responsibilities are properly grouped among the classes.

5. Determine whether widely requested responsibilities might be combined into a single responsibility.

6. Steps 1 to 5 are applied iteratively to each class and through each evolution of the OOA model.

OOT Strategy

- Unit testing is at the level of classes

- Operations within the class are tested

- The state behavior of the class is examined

- Integration applies three different strategies

- Thread-based testing - integrates the set of classes required to respond to one input or event

- Use-based testing - integrates the set of classes required to respond to one use case

- Cluster testing - integrates the set of classes required to demonstrate one collaboration

OOT - Test Case Design

Berard proposes the following approach:

- Each test case should be uniquely identified and should be explicitly associated with the class to be tested.

- The purpose of the test should be stated.

- A list of testing steps should be developed for each test and should contain:

- A list of specified states for the object that is to be tested

- A list of messages and operations that will be exercised as a consequence of the test

- A list of exceptions that may occur as the object is tested

- A list of external conditions (i.e. changes in the environment external to the software that must

exist in order to properly conduct the test).

- Supplementary information that will aid in understanding or implementing the test

OOT Methods: Random Testing

- Identify operations applicable to a class

- Define constraints on their use

- Identify a minimum test sequence

- An operation sequence that defines the minimum life history of the class (object)

- Generate a variety of random (but valid) test sequences

- Exercise other (more complex) class instance life histories
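The steps above can be sketched in C by treating a struct and its functions as the class under test. Everything here is a hypothetical illustration: an `account` with open, deposit, withdraw, and close operations; constraints expressed as assertions; a minimum life history; and randomly generated but valid operation sequences checked against an independently maintained model balance.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical class under test, written as a struct plus operations.
   The assertions encode the constraints on each operation's use. */
struct account { long balance; int open; };

void account_open(struct account *a) { a->balance = 0; a->open = 1; }
void account_deposit(struct account *a, long n) {
    assert(a->open && n > 0);
    a->balance += n;
}
void account_withdraw(struct account *a, long n) {
    assert(a->open && n > 0 && n <= a->balance);
    a->balance -= n;
}
void account_close(struct account *a) { assert(a->open); a->open = 0; }

/* Minimum test sequence: the shortest complete life history. */
void minimum_life_history(void) {
    struct account a;
    account_open(&a);
    account_deposit(&a, 10);
    account_withdraw(&a, 10);
    account_close(&a);
    assert(a.balance == 0 && !a.open);
}

/* Reproducible pseudo-random numbers (linear congruential generator). */
static uint32_t next_random(uint32_t *state) {
    *state = *state * UINT32_C(1664525) + UINT32_C(1013904223);
    return *state >> 16;
}

/* One random but valid life history of `steps` operations.
   Returns 1 if the object agreed with the model throughout. */
int random_life_history(uint32_t seed, int steps) {
    struct account a;
    long model = 0;
    int ok = 1;
    account_open(&a);
    for (int k = 0; k < steps; k++) {
        long amount = 1 + (long)(next_random(&seed) % 100);
        if (next_random(&seed) % 2 == 0 && amount <= model) {
            account_withdraw(&a, amount);   /* constraint: no overdraft */
            model -= amount;
        } else {
            account_deposit(&a, amount);
            model += amount;
        }
        if (a.balance != model)
            ok = 0;
    }
    account_close(&a);
    return ok && !a.open;
}
```

Using a fixed seed keeps each random sequence reproducible, so a failing sequence can be replayed while debugging.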

OOT Methods: Partition Testing

- Reduces the number of test cases required to test a class in much the same way as equivalence

partitioning for conventional software

- State-based partitioning

- Categorize and test operations based on their ability to change the state of a class

- Attribute-based partitioning

- Categorize and test operations based on the attributes that they use

- Category-based partitioning

- Categorize and test operations based on the generic function each performs

OOT Methods: Inter-Class Testing

- For each client class, use the list of class operators to generate a series of random test

sequences. The operators will send messages to other server classes.

- For each message that is generated, determine the collaborator class and the corresponding

operator in the server object.

- For each operator in the server object (that has been invoked by messages sent from the client

object), determine the messages that it transmits.

- For each of the messages, determine the next level of operators that are invoked and incorporate

these into the test sequence

Testing levels

- Testing operations associated with objects

- Testing object classes

- Testing clusters of cooperating objects

- Testing the complete OO system

Object class testing

- Complete test coverage of a class involves

- Testing all operations associated with an object

- Setting and interrogating all object attributes

- Exercising the object in all possible states

- Inheritance makes it more difficult to design object class tests as the information to be tested is not localized

Object integration

- Levels of integration are less distinct in object-oriented systems

- Cluster testing is concerned with integrating and testing clusters of cooperating objects

- Identify clusters using knowledge of the operation of objects and the system features that

are implemented by these clusters

Approaches to cluster testing

- Use-case or scenario testing

- Testing is based on user interactions with the system

- Has the advantage that it tests system features as experienced by users

- Thread testing

- Tests the system's response to events as processing threads through the system

- Object interaction testing

- Tests sequences of object interactions that stop when an object operation does not call on services

from another object